Bagging Machine Learning PPT

Choose an unstable classifier for bagging: bagging helps most when small changes in the training data produce large changes in the fitted model.

Bagging is the application of the bootstrap procedure to a high-variance machine learning algorithm, typically decision trees. In bootstrap aggregating, each model is trained on its own random training set, drawn with replacement from the original data, and each model in the ensemble votes with equal weight.
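The two ingredients named above, sampling with replacement and an equal-weight vote, fit in a few lines of standard-library Python. This is a minimal sketch; the helper names `bootstrap_sample` and `majority_vote` are hypothetical, chosen only for illustration:

```python
import random
from collections import Counter


def bootstrap_sample(data, rng):
    """Draw len(data) items uniformly at random, with replacement."""
    return [rng.choice(data) for _ in range(len(data))]


def majority_vote(predictions):
    """Equal-weight vote: each model contributes exactly one vote.
    On a tie, Counter.most_common returns the label encountered first."""
    return Counter(predictions).most_common(1)[0][0]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
data = list(range(10))
sample = bootstrap_sample(data, rng)
print(sorted(sample))  # typically contains repeats and omits some items
print(majority_vote(["cat", "dog", "cat"]))  # cat
```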

My slides are based on theirs, with minor modifications. Introduction to Machine Learning, Lior Rokach, Department of Information Systems Engineering, Ben-Gurion University of the Negev. This is followed by some lesser-known areas of supervised learning.

Bagging is a powerful ensemble method that helps reduce variance and, by extension, prevent overfitting.
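One standard way to see why averaging reduces variance: assume the $B$ bagged predictors $\hat f_b(x)$ are identically distributed with variance $\sigma^2$ and pairwise correlation $\rho$ (symbols introduced here for illustration). Then the variance of their average is:

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} \hat f_b(x)\right)
  = \frac{1}{B^2}\left[B\sigma^2 + B(B-1)\rho\sigma^2\right]
  = \rho\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
```

The second term shrinks as $B$ grows while the first does not, which is why lowering the correlation between trees, as random forests do, reduces variance further.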

A random forest is a classifier consisting of a collection of tree-structured classifiers. The inputs are a training set of N examples, each an (attributes, class label) pair, and a base learning model, e.g. a decision tree or a neural network. Another approach: instead of training different models on the same data, train the same model multiple times on different data.

Random forests, a variant of bagging proposed by Breiman, are a general class of ensemble-building methods that use a decision tree as the base classifier. Ensemble methods improve predictive accuracy by combining a group, or ensemble, of models that together often outperform any individual model.

For $b = 1, 2, \ldots, B$: draw a bootstrapped sample $D_b$. Algorithms such as neural networks and decision trees are examples of unstable learning algorithms. A slide then explains the distinction between bagging and boosting in terms of the bias-variance trade-off.
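A minimal sketch of that loop, assuming one-dimensional inputs, binary labels, and a decision stump as the base learner. `fit_stump`, `bagging_fit` and `bagging_predict` are hypothetical names for illustration, not a library API:

```python
import random
from collections import Counter


def fit_stump(X, y):
    """Hypothetical base learner: a decision stump on 1-D inputs that picks the
    threshold and leaf labels with the fewest training errors."""
    best = None
    for threshold in sorted(set(X)):
        for left, right in ((0, 1), (1, 0)):
            errors = sum((left if x <= threshold else right) != t for x, t in zip(X, y))
            if best is None or errors < best[0]:
                best = (errors, threshold, left, right)
    _, threshold, left, right = best
    return lambda x: left if x <= threshold else right


def bagging_fit(X, y, B, seed=0):
    """For b = 1, ..., B: draw a bootstrapped sample D_b and fit one model on it."""
    rng = random.Random(seed)
    n = len(X)
    models = []
    for _ in range(B):
        idx = [rng.randrange(n) for _ in range(n)]  # indices of D_b, with replacement
        models.append(fit_stump([X[i] for i in idx], [y[i] for i in idx]))
    return models


def bagging_predict(models, x):
    """Every model votes with equal weight; the most common label wins."""
    return Counter(m(x) for m in models).most_common(1)[0][0]


X = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
y = [0, 0, 0, 0, 1, 1, 1, 1]
models = bagging_fit(X, y, B=25)
print(bagging_predict(models, 0.05), bagging_predict(models, 0.95))
```

On this separable toy data, any stump fitted to a bootstrap sample containing both classes puts its threshold at the largest class-0 point drawn (at most 0.4), so the ensemble votes 0 near 0.05 and 1 near 0.95. Stumps are used only to keep the sketch short; bagging usually pays off more with deeper, higher-variance trees.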

In the first section of this post, we present the notions of weak and strong learners and introduce three main ensemble learning methods.

The bias-variance trade-off is a challenge we all face when training machine learning algorithms.

Friday, April 8, 2022

Random forests often do better than bagged entropy-reducing decision trees. Bootstrap estimation: repeatedly draw n samples from D with replacement, and for each set of samples estimate a statistic; the spread of these estimates approximates the statistic's sampling variability. A bagging classifier is an ensemble meta-estimator that fits base classifiers, each on a random subset of the original dataset, and then aggregates their individual predictions, either by voting or by averaging, to form a final prediction.
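The bootstrap estimation steps can be illustrated with the sample mean as the statistic. This is a standard-library sketch; the data values, the number of resamples and the helper name `bootstrap_estimates` are made up for illustration:

```python
import random
import statistics


def bootstrap_estimates(data, statistic, n_resamples, seed=0):
    """Repeatedly draw len(data) points from data with replacement and
    evaluate the statistic on each resample."""
    rng = random.Random(seed)
    n = len(data)
    return [statistic([rng.choice(data) for _ in range(n)]) for _ in range(n_resamples)]


data = [2.1, 3.4, 1.9, 5.0, 4.2, 3.3, 2.8, 4.7]
estimates = bootstrap_estimates(data, statistics.mean, n_resamples=1000)
boot_mean = statistics.mean(estimates)  # bootstrap estimate of the statistic
boot_se = statistics.stdev(estimates)   # bootstrap standard error
print(round(boot_mean, 2), round(boot_se, 2))
```

The standard deviation of the resampled estimates serves as the bootstrap standard error. Bagging reuses the same resampling step but fits a model, rather than a statistic, to each resample.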

The idea behind bagging is to combine the predictions of several base learners to produce a more accurate output. Bagging is commonly used with decision trees, where it significantly improves model stability, raising accuracy and reducing variance, which mitigates overfitting.

In boosting, the first step is to train model A on the whole set.

Bootstrap aggregation, also known as bagging, is a powerful ensemble method that was proposed to prevent overfitting. The bagging algorithm: for each ensemble member, obtain a bootstrap sample from the training data and build a model from that bootstrap data; then, given new data, combine the models' predictions. This is followed by an examination of how the decision threshold affects classification accuracy.

Then, in the second section, we focus on bagging and discuss notions such as bootstrapping, bagging, and random forests. Furthermore, machine learning is being applied as a solution in a growing number of areas. The bagging technique is useful for both regression and statistical classification.
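For regression, the vote is replaced by an average. A sketch under stated assumptions: one-dimensional inputs and a 1-nearest-neighbour base regressor. `fit_1nn` and `bagged_regressor` are hypothetical helpers; 1-NN is picked because its predictions change a lot with the training sample:

```python
import random
import statistics


def fit_1nn(X, y):
    """Hypothetical high-variance base regressor: 1-nearest neighbour on 1-D inputs."""
    pairs = list(zip(X, y))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


def bagged_regressor(X, y, B=50, seed=0):
    """Fit B base regressors on bootstrap samples and average their outputs."""
    rng = random.Random(seed)
    n = len(X)
    models = []
    for _ in range(B):
        idx = [rng.randrange(n) for _ in range(n)]  # one bootstrap sample
        models.append(fit_1nn([X[i] for i in idx], [y[i] for i in idx]))
    # Regression: aggregate by averaging instead of voting.
    return lambda x: statistics.fmean(m(x) for m in models)


X = [0, 1, 2, 3, 4, 5]
y = [0.0, 1.1, 1.9, 3.2, 3.9, 5.1]
predict = bagged_regressor(X, y)
print(round(predict(2.5), 2))
```

Because the output is an average of observed targets, it always lies between the smallest and largest training target.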

Understanding the effect of the tree split metric on feature importance. The first step builds the model (the learners), and the second generates fitted values.

Finally, take a vote over the classifiers. In the future, machine learning will play an important role in our daily lives.

Bagging and Boosting, CS 2750 Machine Learning. Deep learning (DL) is a subset of ML in which artificial neural networks adapt to and learn from vast amounts of data. Such a meta-estimator can typically be used as a way to reduce the variance of a base estimator, such as a decision tree.

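The Bayes optimal classifier is an ensemble learner. Boosting trains a sequence of T base models on T different sampling distributions defined over the training set D; the sampling distribution $D_t$ for building model t is obtained by modifying the previous distribution $D_{t-1}$ so that examples misclassified at step $t-1$ receive higher weight. The bagging algorithm, in contrast, builds N trees in parallel from N randomly generated datasets drawn with replacement.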
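The slides do not give the exact reweighting rule for $D_t$. As one concrete, well-known instance, AdaBoost (Freund and Schapire) upweights misclassified examples as sketched below; this is a named example of the idea, not necessarily the rule the original slides use. Labels are assumed to be in {-1, +1}, and `adaboost_update` is a hypothetical helper:

```python
import math


def adaboost_update(weights, y_true, y_pred):
    """One AdaBoost round for labels in {-1, +1}.
    weights: current distribution D_t over training examples (sums to 1).
    Returns (alpha_t, D_{t+1})."""
    # Weighted error of the current base model under D_t.
    eps = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p)
    if not 0 < eps < 0.5:
        raise ValueError("weighted error must be in (0, 0.5) for this sketch")
    alpha = 0.5 * math.log((1 - eps) / eps)
    # Misclassified examples (t * p = -1) are multiplied by e^alpha > 1,
    # correctly classified ones by e^-alpha < 1.
    new = [w * math.exp(-alpha * t * p) for w, t, p in zip(weights, y_true, y_pred)]
    z = sum(new)  # normaliser Z_t, so D_{t+1} sums to 1
    return alpha, [w / z for w in new]


alpha, d_next = adaboost_update([0.25] * 4, [1, 1, -1, -1], [1, 1, -1, 1])
print(round(alpha, 4), [round(w, 4) for w in d_next])
```

With uniform starting weights and one mistake out of four, the weighted error is 0.25, $\alpha_t = \tfrac12 \ln 3$, and the misclassified example's weight rises from 0.25 to 0.5 while the other three fall to 1/6.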

Each tree is grown with a random vector $V_k$, where the $V_k$, $k = 1, \ldots, L$, are independent and identically distributed.
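The random vector $V_k$ bundles all the randomness used to grow tree $k$. The sketch below assumes, for illustration, that it consists of a per-tree seed, the bootstrap indices that seed implies, and a random subset of candidate features at each split of size $\lfloor\sqrt{p}\rfloor$ for $p$ features (a common default for classification, assumed here). `tree_random_vector` and `candidate_features` are hypothetical names:

```python
import math
import random


def tree_random_vector(n_samples, master_rng):
    """Hypothetical representation of V_k: a fresh seed for tree k plus the
    bootstrap indices it implies. Every tree's vector is drawn the same way,
    independently of the other trees."""
    seed = master_rng.getrandbits(32)
    tree_rng = random.Random(seed)
    bootstrap_idx = [tree_rng.randrange(n_samples) for _ in range(n_samples)]
    return tree_rng, bootstrap_idx


def candidate_features(n_features, tree_rng):
    """Features a tree may split on at one node: floor(sqrt(p)) distinct ones."""
    m = max(1, math.isqrt(n_features))
    return tree_rng.sample(range(n_features), m)


master = random.Random(42)  # arbitrary seed
vectors = [tree_random_vector(100, master) for _ in range(3)]
tree_rng, idx = vectors[0]
feats = candidate_features(16, tree_rng)  # would be called again at every split
print(len(idx), sorted(feats))
```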

The meta-algorithm, which is a special case of model averaging, was originally designed for classification and is usually applied to decision tree models, but it can be used with any type of model.

Machine Learning (CS771A): Ensemble Methods. Hypothesis space: variable size (nonparametric). It can model any function if you use an appropriate predictor.

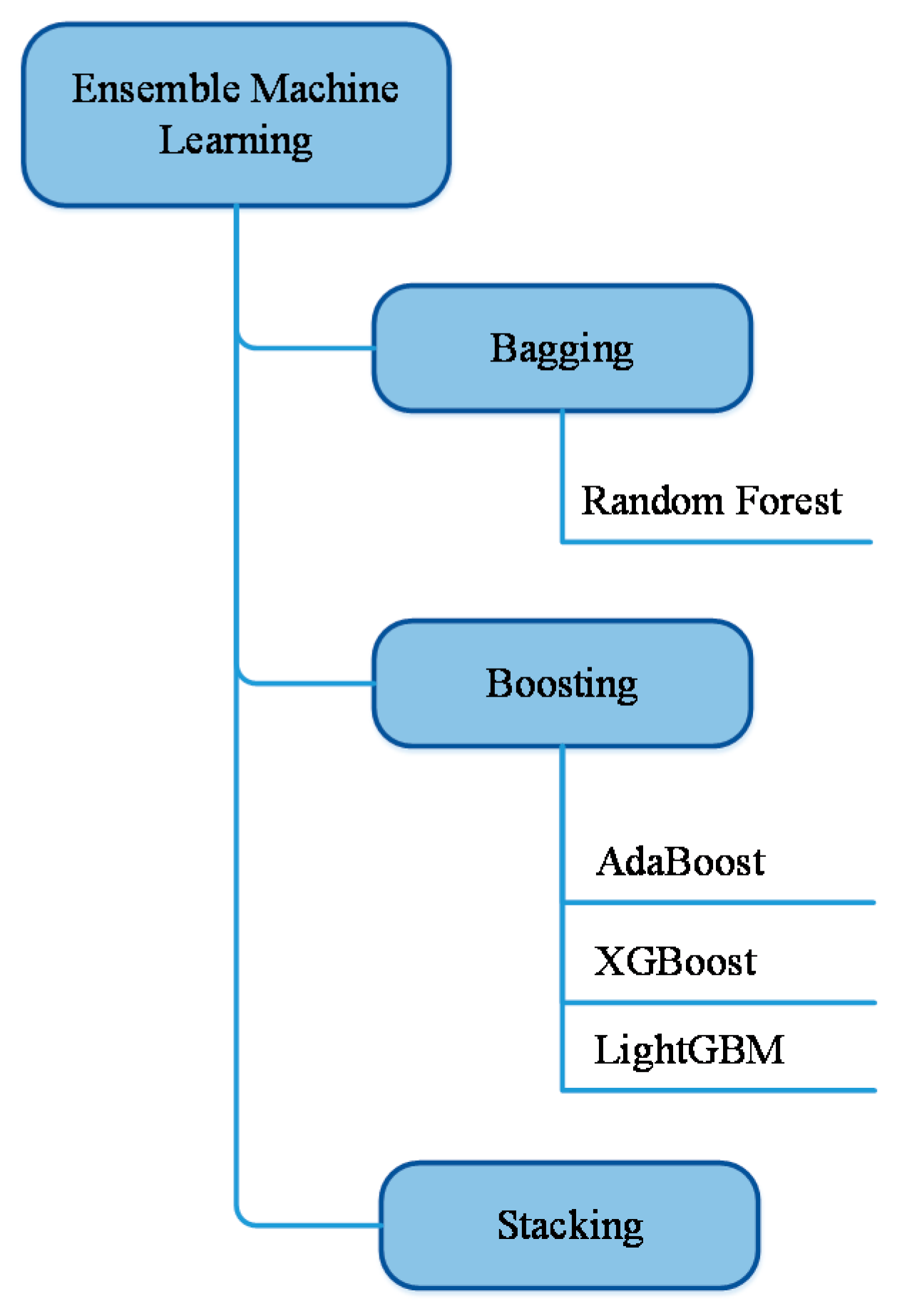

Ensemble machine learning methods fall mainly into two categories: bagging and boosting.

Bagging (Breiman, 1996), whose name is derived from bootstrap aggregation, was the first effective method of ensemble learning and is one of the simplest methods of arcing [1]. The three main ensemble methods are bagging, boosting, and stacking. In boosting, a second model B is trained with exaggerated data on the regions in which model A performs poorly.

Common ensemble methods include bagging (bootstrap aggregation), AdaBoost, and random forests. Let's assume we have a sample dataset of 1,000 instances (x) and we are using the CART algorithm. Random forests are an ensemble of decision tree (DT) classifiers.
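For the 1,000-instance example, it helps to know how much of the data each bootstrap sample actually contains. A given instance is missed by one draw with probability $1 - 1/n$, so it appears at least once with probability $1 - (1 - 1/n)^n \approx 1 - e^{-1} \approx 0.632$. The remaining roughly 36.8% are the out-of-bag instances for that tree. A quick standard-library check (the seed is arbitrary):

```python
import random

n = 1000  # size of the sample dataset in the example
expected_unique = 1 - (1 - 1 / n) ** n  # P(an instance is drawn at least once), about 0.632
rng = random.Random(0)  # arbitrary seed
sample = [rng.randrange(n) for _ in range(n)]  # one bootstrap sample of indices
observed_unique = len(set(sample)) / n
print(f"expected {expected_unique:.3f}, observed {observed_unique:.3f}")
```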

Given a training dataset $D = \{(x_n, y_n)\}_{n=1}^{N}$ and a separate test set $T = \{x_t\}_{t=1}^{T}$, we build and deploy a bagging model with the following procedure.
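The procedure itself is not included here. A standard formulation, sketched under the assumptions that $B$ bootstrap replicates are drawn and that $\mathrm{fit}$ is a placeholder for whatever base learner is used (for example, a CART tree), is:

```latex
\begin{aligned}
&\text{for } b = 1, \dots, B:\quad D_b \leftarrow N \text{ draws from } D \text{ with replacement},\qquad \hat f_b \leftarrow \mathrm{fit}(D_b)\\
&\hat y_t = \frac{1}{B}\sum_{b=1}^{B} \hat f_b(x_t) \qquad \text{(regression)}\\
&\hat y_t = \arg\max_{c} \sum_{b=1}^{B} \mathbf{1}\!\left[\hat f_b(x_t) = c\right] \qquad \text{(classification)},\qquad x_t \in T
\end{aligned}
```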
